Abstract

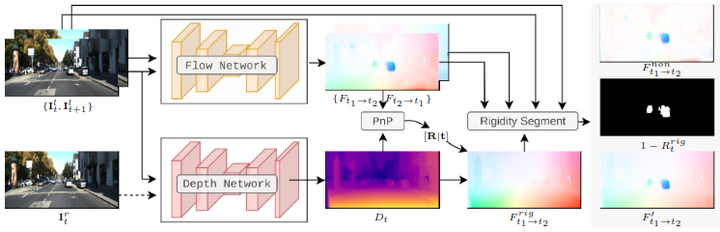

Scene flow estimation in dynamic scenes remains a challenging task. Computing scene flow from a combination of 2D optical flow and depth has been shown to be considerably faster with acceptable performance. In this work, we present a unified framework for joint unsupervised learning of stereo depth and optical flow with explicit local rigidity to estimate scene flow. We estimate camera motion directly from the optical flow and depth predictions using a Perspective-n-Point method with a RANSAC outlier rejection scheme. To disambiguate object motion from camera motion in the scene, we identify the rigid region using the reprojection error and the photometric similarity. Joint learning with local rigidity refines both the depth and optical flow networks. The framework improves all four tasks: depth, optical flow, camera motion estimation, and object motion segmentation. Evaluated on the KITTI benchmark, the proposed framework achieves state-of-the-art results among unsupervised methods. Our models and code are available at https://github.com/lliuz/unrigidflow.
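To make the idea of composing scene flow from 2D optical flow and depth concrete, the following is a minimal sketch and not the released implementation. It assumes a pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, and it assumes that `depth_t1` is the depth sampled at the flow-displaced pixel in the second frame. The function names `backproject` and `scene_flow` are illustrative only.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def backproject(u: float, v: float, depth: float,
                fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Lift pixel (u, v) with the given depth to a 3D point in the camera frame."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def scene_flow(u: float, v: float, flow_uv: Tuple[float, float],
               depth_t: float, depth_t1: float,
               fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """3D displacement of one pixel between two frames, per camera frame.

    Assumption: depth_t1 is the depth at the matched pixel (u + du, v + dv)
    in the second frame. The result still contains the camera's own motion;
    it is not compensated for ego-motion.
    """
    du, dv = flow_uv
    p0 = backproject(u, v, depth_t, fx, fy, cx, cy)
    p1 = backproject(u + du, v + dv, depth_t1, fx, fy, cx, cy)
    return (p1[0] - p0[0], p1[1] - p0[1], p1[2] - p0[2])


if __name__ == "__main__":
    # A point that only moves toward the camera: its image position shifts
    # while its lateral 3D position stays fixed.
    sf = scene_flow(60.0, 50.0, (10.0, 0.0), 10.0, 5.0,
                    fx=100.0, fy=100.0, cx=50.0, cy=50.0)
    print(sf)
```

Because this displacement mixes object motion with camera motion, separating the two requires an estimate of the camera pose, which is the role the Perspective-n-Point step plays in the framework described above.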